Michael Wray1, Diane Larlus2, Gabriela Csurka2 and Dima Damen1

1University of Bristol, 2Naver Labs

Abstract

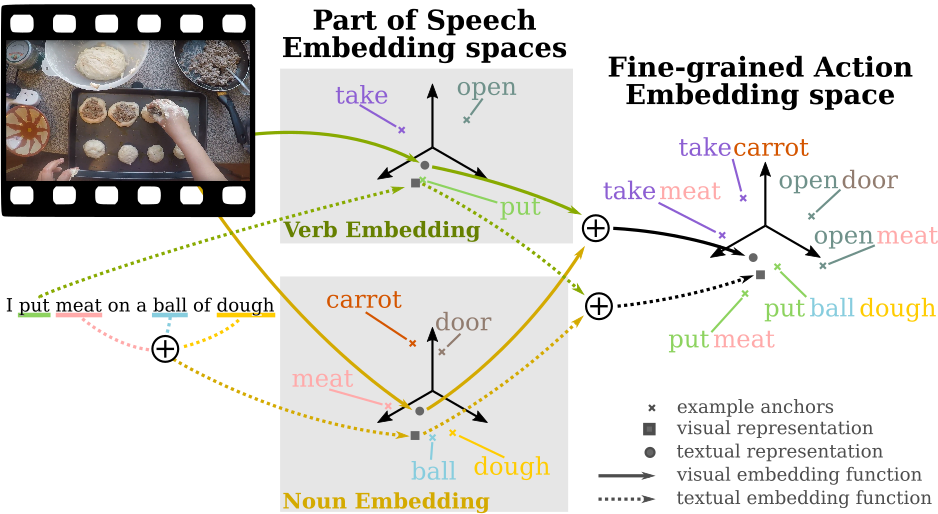

We address the problem of cross-modal fine-grained action retrieval between text and video. Cross-modal retrieval is commonly achieved through learning a shared embedding space, that can indifferently embed modalities.

In this paper, we propose to enrich the embedding by disentangling parts-of-speech (PoS) in the accompanying captions. We build a separate multi-modal embedding space for each PoS tag. The outputs of multiple PoS embeddings are then used as input to an integrated multi-modal space, where we perform action retrieval. All embeddings are trained jointly through a combination of PoS-aware and PoS-agnostic losses. Our proposal enables learning specialised embedding spaces that offer multiple views of the same embedded entities.

We report the first retrieval results on fine-grained actions for the large-scale EPIC dataset, in a generalised zero-shot setting. Results show the advantage of our approach for both video-to-text and text-to-video action retrieval.

We also demonstrate the benefit of disentangling the PoS for the generic task of cross-modal video retrieval on the MSR-VTT dataset.

Video

Paper

Poster

Poster presented at ICCV can be found here

Code and Features

The code and download links for the features can be found here.

Bibtex

@InProceedings{wray2019fine,

author = {Wray, Michael and Larlus, Diane and Csurka, Gabriela and Damen, Dima},

title = {Fine-Grained Action Retrieval through Multiple Parts-of-Speech Embeddings},

booktitle = {IEEE/CVF International Conference on Computer Vision (ICCV)},

year = {2019}

}

Acknowledgements

This research is supported by EPSRC LOCATE (EP/N033779/1) and EPSRC Doctoral Training Partnerships (DTP). Part of this work was carried out during Michael’s internship at Naver Labs Europe.