Michael Wray, Hazel Doughty* and Dima Damen

University of Bristol

Abstract

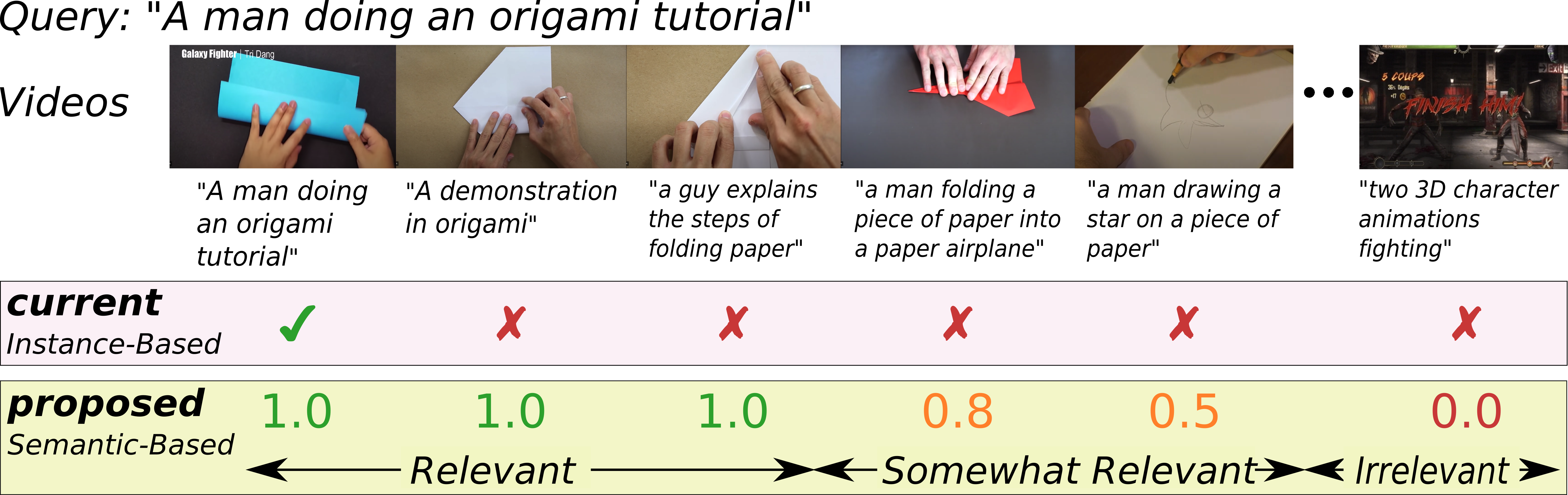

Current video retrieval efforts all base their evaluation on an instance-based assumption: that only a single caption is relevant to a query video and vice versa. In this paper, we demonstrate that this assumption results in performance comparisons that are often not indicative of models’ retrieval capabilities. We propose a move to semantic similarity video retrieval, where (i) multiple videos can be deemed equally relevant, and their relative ranking does not affect a method’s reported performance, and (ii) retrieved videos (or captions) are ranked by their similarity to a query. We propose several proxies to estimate semantic similarities in large-scale retrieval datasets, without additional annotations. Our analysis is performed on three commonly used video retrieval benchmark datasets (MSR-VTT, YouCook2 and EPIC-KITCHENS).
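To make property (i) concrete, the sketch below scores a ranked list with normalised discounted cumulative gain (nDCG), a standard metric for graded relevance. Choosing nDCG here is an assumption for illustration, since the abstract above does not name a metric. The item names and similarity values are hypothetical, not taken from any of the datasets.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain of relevance scores given in ranked order."""
    return sum(rel / math.log2(rank + 1)
               for rank, rel in enumerate(relevances, start=1))


def ndcg(ranked_relevances):
    """DCG of the given ranking divided by the DCG of the ideal ordering."""
    ideal_dcg = dcg(sorted(ranked_relevances, reverse=True))
    if ideal_dcg == 0:
        return 0.0  # no relevant items at all
    return dcg(ranked_relevances) / ideal_dcg


# Hypothetical semantic similarity of four videos to one query caption.
# Videos "a" and "b" are equally relevant.
similarity = {"a": 1.0, "b": 1.0, "c": 0.3, "d": 0.0}

ranking_1 = ["a", "b", "c", "d"]
ranking_2 = ["b", "a", "c", "d"]  # swaps the two equally relevant videos
ranking_3 = ["d", "c", "a", "b"]  # places the least relevant videos first

score_1 = ndcg([similarity[v] for v in ranking_1])
score_2 = ndcg([similarity[v] for v in ranking_2])
score_3 = ndcg([similarity[v] for v in ranking_3])
```

Under this sketch, swapping the two equally relevant videos leaves the score unchanged, while pushing them below less relevant videos lowers it. An instance-based metric would instead penalise whichever ranking does not put the single "correct" video first.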

Video

Paper

Bibtex

@inproceedings{wray2021semantic,
  title={On Semantic Similarity in Video Retrieval},
  author={Wray, Michael and Doughty, Hazel and Damen, Dima},
  booktitle={CVPR},
  year={2021}
}

Github

Information for the semantic retrieval benchmark task is coming soon on GitHub.

*Now at University of Amsterdam